Data science

Data science is the field of study that combines domain expertise, programming skills, and knowledge of mathematics and statistics to extract meaningful insights from large amounts of data.

Web scraping (automatically downloading web pages and extracting data from them) can speed up the data collection process.

Instead of looking at the job site every day, you can use Python to help automate the repetitive parts of your job search.

1. Web scraping example using Python [extract the title, head, and h1 tags from a website]

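```python
from bs4 import BeautifulSoup
import requests

url = "https://flipkart.com"

req = requests.get(url, timeout=10)

# "lxml" is a fast third-party parser (pip install lxml);
# "html.parser" is the alternative built into Python
soup = BeautifulSoup(req.text, "lxml")

print(soup.title)  # the <title> tag
print(soup.head)   # the whole <head> section
print(soup.h1)     # the first <h1> tag (None if the page has none)

# Save the first <h1> tag to a file; "with" closes the file automatically
with open("test.txt", "w", encoding="utf-8") as file1:
    file1.write(str(soup.h1))
```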

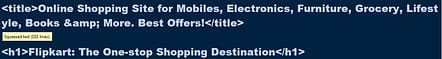

The screenshot above shows the output of the program.
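The example above prints whole tags, markup included (for instance `<h1>...</h1>`). To get only the text inside a tag, BeautifulSoup's `get_text()` can be used instead.

The following is a minimal sketch that parses an inline HTML string rather than a live site, so it runs offline. The sample markup and the `extract_text` helper are hypothetical and exist only for illustration. The sketch uses Python's built-in `"html.parser"`, so the lxml package is not required:

```python
from bs4 import BeautifulSoup

# Hypothetical sample page, used instead of a live request so the example runs offline
SAMPLE_HTML = """
<html>
  <head><title>Sample Store</title></head>
  <body>
    <h1> Today's Deals </h1>
    <p>Some text</p>
  </body>
</html>
"""


def extract_text(html: str) -> dict:
    """Return the text of the <title> tag and the first <h1> tag (hypothetical helper)."""
    # "html.parser" ships with Python, so no extra parser package is needed
    soup = BeautifulSoup(html, "html.parser")
    # get_text(strip=True) drops the surrounding tags and leading/trailing whitespace
    title = soup.title.get_text(strip=True) if soup.title else None
    h1 = soup.h1.get_text(strip=True) if soup.h1 else None
    return {"title": title, "h1": h1}


print(extract_text(SAMPLE_HTML))
```

The `if ... else None` guards matter because many real pages have no `<h1>`, and calling `get_text()` on `None` would raise an `AttributeError`.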

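2. Web scraping example [check whether a site responds to a request. A status code of 200 means the page was fetched successfully, while any other code means the request failed or was refused. A 200 response does not by itself mean scraping is allowed; that is governed by the site's robots.txt and terms of service.]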

```python
import requests

r = requests.get("https://www.amazon.in", timeout=10)

# 200 means success; codes such as 403 or 503 mean the request was refused or failed
print(r.status_code)
```

Output:

```
200
```
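A status code only says whether a request succeeded. Whether a site permits crawlers to fetch a given path is declared in its `robots.txt` file, and Python's standard library can check those rules with `urllib.robotparser.RobotFileParser`.

The sketch below parses rules supplied as text, so it runs offline. The sample rules, the example.com URLs, and the `can_fetch` wrapper are hypothetical and are used only for illustration:

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules, parsed offline for illustration
SAMPLE_ROBOTS = """
User-agent: *
Disallow: /checkout/
Allow: /
"""


def can_fetch(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Check a URL against robots.txt rules given as text (hypothetical helper)."""
    parser = RobotFileParser()
    # parse() accepts the file's lines; Python's parser applies the first matching rule
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(can_fetch(SAMPLE_ROBOTS, "https://example.com/products/1"))
print(can_fetch(SAMPLE_ROBOTS, "https://example.com/checkout/cart"))
```

To check a live site instead, create `RobotFileParser("https://www.amazon.in/robots.txt")`, call its `read()` method (this makes a network request), and then call `can_fetch(user_agent, url)`. Keep in mind that robots.txt covers only crawler access; a site's terms of service may impose further limits.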

3. Retrieve live COVID-19 data using web scraping and store it locally in .csv format

```python
import bs4
import pandas as pd
import requests

url = 'https://www.worldometers.info/coronavirus/country/india/'

result = requests.get(url, timeout=10)
soup = bs4.BeautifulSoup(result.text, 'lxml')

# Search for the divs with class "maincounter-number" (cases, deaths, recovered)
cases = soup.find_all('div', class_='maincounter-number')

# To store the scraped numbers
data = []

for i in cases:
    span = i.find('span')
    data.append(span.string)

print('Cases', '\tDeaths', '\tRecovered')
print(data, end='\t')

# DataFrame to view the data as a table
df = pd.DataFrame({"CoronaData": data})

# Label the rows
df.index = ['TotalCases', 'Deaths', 'Recovered']

# Store as a CSV file (which Excel can also open)
df.to_csv('Corona_Data.csv')
```

Output of the above code:

```
Cases   Deaths   Recovered
['9,857,380 ', '143,055', '9,357,464']
```
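The scraped values are strings with thousands separators, and the first one has a trailing space. As a result, they cannot be added or compared as numbers until they are converted.

Below is a minimal sketch of that conversion. It assumes every value is a run of digits with optional commas and surrounding whitespace; a placeholder such as "N/A" would raise a `ValueError`. The helper names `to_int` and `parse_counts` are hypothetical:

```python
def to_int(count: str) -> int:
    """Convert a scraped count such as '9,857,380 ' to an int (hypothetical helper)."""
    # Remove surrounding whitespace and the thousands separators before converting
    return int(count.strip().replace(",", ""))


def parse_counts(raw: list) -> dict:
    """Map the three scraped strings to their row labels as integers."""
    labels = ["TotalCases", "Deaths", "Recovered"]
    if len(raw) != len(labels):
        raise ValueError(f"expected {len(labels)} values, got {len(raw)}")
    return {label: to_int(value) for label, value in zip(labels, raw)}


# Values taken from the output shown above
print(parse_counts(['9,857,380 ', '143,055', '9,357,464']))
```

The length check fails loudly if the page layout changes and `find_all` returns a different number of counters. Without it, values could silently end up under the wrong labels.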